Guardian Ambient Headline Radio - The Definitive Blogpost

I've written about the GAHR before, but now that it's finished (well, for the moment anyway) I wanted to put it all down in one place. Sorry, this is a long one; you may want to skip to the part you're interested in...

If you're on Chrome or Firefox (the only things I've tested it on) you can listen/see the project here: revdancatt.com/projects/CAT508-guardian-ambient-headline-radio.

It should play seamless looping sounds and read headlines at you. It doesn't seem to work on Safari at all, because Safari hates the <audio> tag.

* * *

THE VISION

It started just over a year ago, before the last SxSW (2011). The Guardian was going, and internally we were asked for suggestions for what we could do there that demonstrated our internet/data/hacking/open/api skillz. I think we had a room to show our stuff, and one reaction I normally have when faced with a space to fill is to fill it with speakers. The bigger and more fuck-off the better.

A room, with a couple of laptops running Ableton Live with various pad/soundscapes running through them. Also, have it wired up to the Guardian API picking up the latest articles, videos and podcasts. Then convert those articles into speech, auto-tune it and drop it into the mix, changing the levels on the soundscapes to match the "mood" of the article, or the section it was from. So "sporty" background sounds for sport stories, "filmy" background sounds for film stories and so on. All while cleverly and automatically chopping up the podcasts to drop soundbites and loops into the mix too.

Meanwhile our reporters would go round interviewing people and bands (from the music bit) and general ambient field recordings; out in the street, in the crowd and so on. Which they could also download to another laptop in the room, which would again feed bits and pieces into the mix.

All this would then be broadcast on the internet 24 hours a day for the full duration of the SxSW festival(s). An evolving news soundscape, that could happily sit in the background but still keep you informed of roughly what was going on if you paid attention to it.

* * *

This browser-based end result is sort of that.

Oh of course, I had no idea how to use Ableton Live, and so began the year of learning.

In between then and now a couple of things happened.

One was a hack-day at work where I threw some of the code running live through Ableton, and the other was leaving it running over a weekend. Here's a video from the hack-day, where I point at various things...

And the audio on SoundCloud so you can hear how it compares to the javascript version.

Note: this audio which would have been on SoundCloud has now vanished to the linkrot of time.

* * *

SOUND DESIGN

Actually, I expect some influence for the project comes from way, way back, specifically these two tracks...

Vangelis: Albedo 0.39

Vangelis: Intergalactic Radio Station

...for those without embeds, here are the links...

...which I kind of fell in love with when I was a teenager. This idea of future radio, soundscape, spoken word over the top and so on.

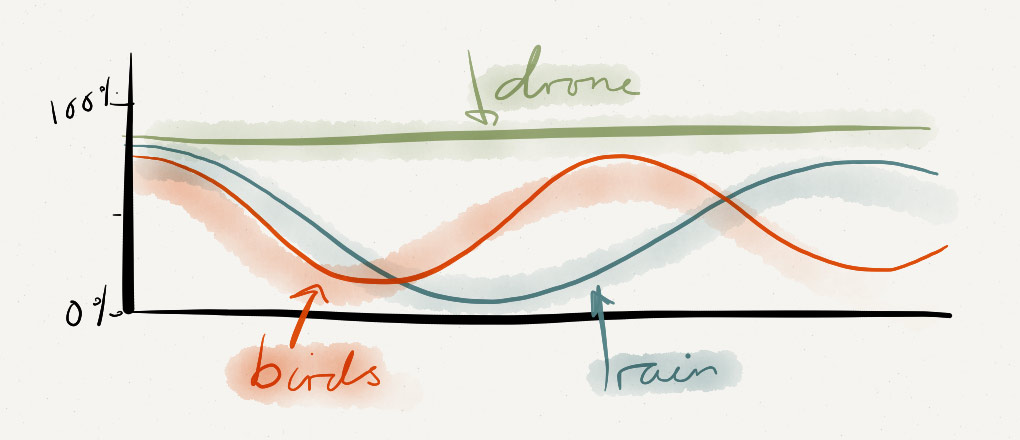

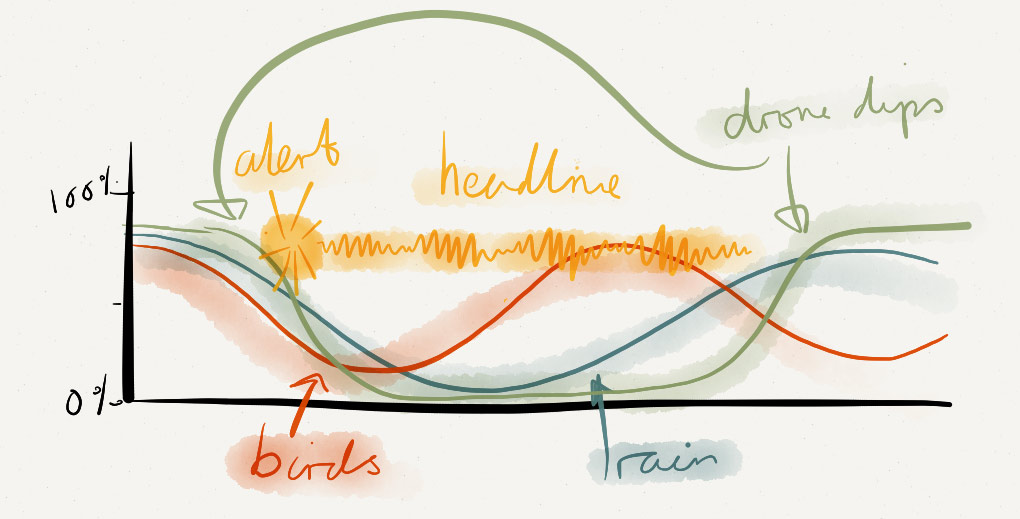

So I wanted an evolving soundscape that mutated based on the current headline's section, or several soundscapes that I could blend between. But for this I have just the one, made up of three layers, all produced by Native Instruments' SpaceDrone.

SpaceDrone is one of the three factory defaults that ships with the free version of the REAKTOR player (you can grab it here).

The layers are...

- A background ambient drone, the "A Planet Called Earth" Snapshot.

Note: this audio which would have been on SoundCloud has now vanished to the linkrot of time.

- The rain/"white noise", unsurprisingly the "PouringRain" Snapshot.

Note: this audio which would have been on SoundCloud has now vanished to the linkrot of time.

- And bird "tweets", again unsurprisingly the "Birds" default.

Note: this audio which would have been on SoundCloud has now vanished to the linkrot of time.

* * *

And the code controlling that basically performs a sin calculation based on the number of milliseconds since the start of 1970 (the birth of time for computers)...

    setInterval(function() {
        // getTime() is milliseconds since the start of 1970
        var d = new Date();
        // slow sine wave, shifted from -1..1 into 0..1
        var v = Math.sin(d.getTime()/32127)/2+0.5;
        $('#birds').get(0).volume=v;
        // a slightly faster wave for the rain, capped at 0.8
        v = Math.sin(d.getTime()/25000)/2+0.5;
        $('#rain').get(0).volume=v*0.8;
    }, 1000);

The result of a sin is a value between -1 and 1. Obviously we only want positive numbers; negative volume is no good to us. To shift everything up into positive numbers we divide by 2 (giving us -0.5 to 0.5) and finally add 0.5, which moves everything up into the range 0 to 1.

In the above code I'm knocking back the rain a bit by multiplying the volume by 0.8.
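As a rough sketch of the arithmetic above, the mapping from clock time to volume can be pulled out into a small function. The names `lfoVolume` and `cycleSeconds` are my own labels for illustration and are not part of the project's code; only the divisors 32127 and 25000 and the 0.8 scale come from the snippet.

```javascript
// Hypothetical helper: maps a timestamp to a volume between 0 and `scale`.
// timeMs  - milliseconds since the start of 1970 (e.g. Date.now())
// divisor - larger values make the volume drift more slowly
// scale   - optional ceiling for the volume (defaults to 1)
function lfoVolume(timeMs, divisor, scale = 1) {
  const s = Math.sin(timeMs / divisor); // -1 .. 1
  return (s / 2 + 0.5) * scale;         //  0 .. scale
}

// One full swell-and-fade of the sine wave takes 2 * PI * divisor milliseconds.
function cycleSeconds(divisor) {
  return (2 * Math.PI * divisor) / 1000;
}

console.log(lfoVolume(0, 32127));             // sin(0) is 0, so the midpoint: 0.5
console.log(cycleSeconds(32127).toFixed(0));  // "202" seconds for the birds
console.log(cycleSeconds(25000).toFixed(0));  // "157" seconds for the rain
```

Because the two cycles have different lengths, the birds and the rain drift in and out of step with each other rather than rising and falling together.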

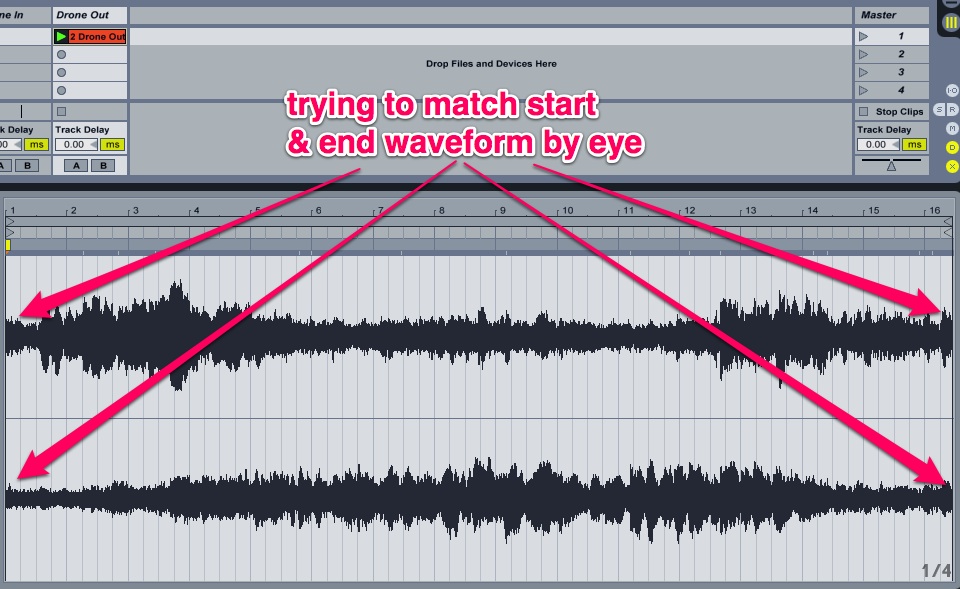

And lastly, because the main background ambient sound is recorded directly from SpaceDrone and evolves with sweeping pads, rather than being a simple looping sample, I had to make it loop myself (the rain and birds loop just fine without any work).

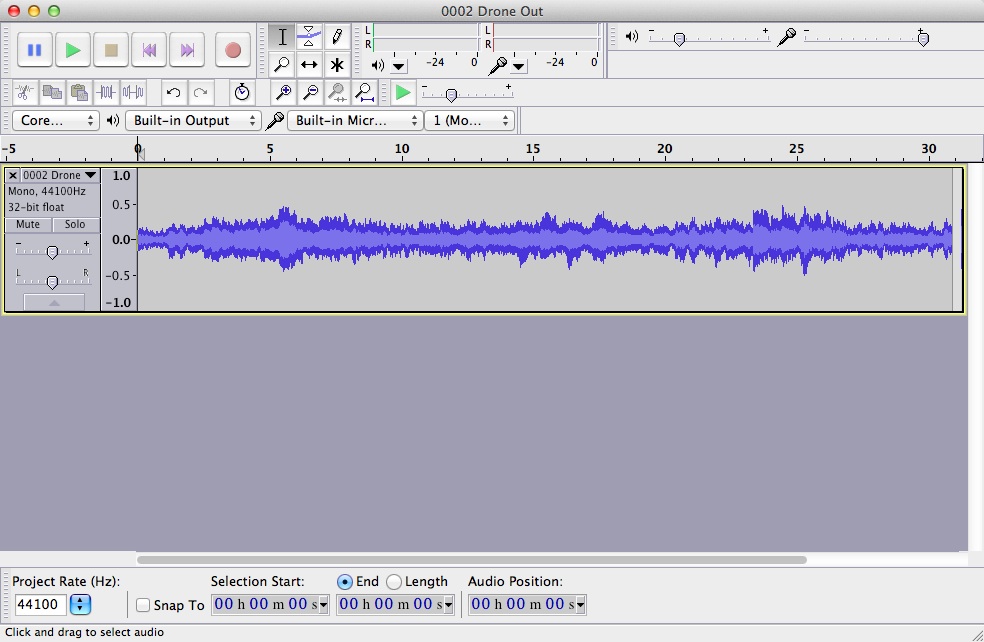

Here's the waveform of the background as recorded into Ableton Live. As you can see it's recorded in stereo and some bits are louder than others. To increase the chances of getting a good loop starting position I recorded until it looked like the volume of each channel roughly matched the starting volume...

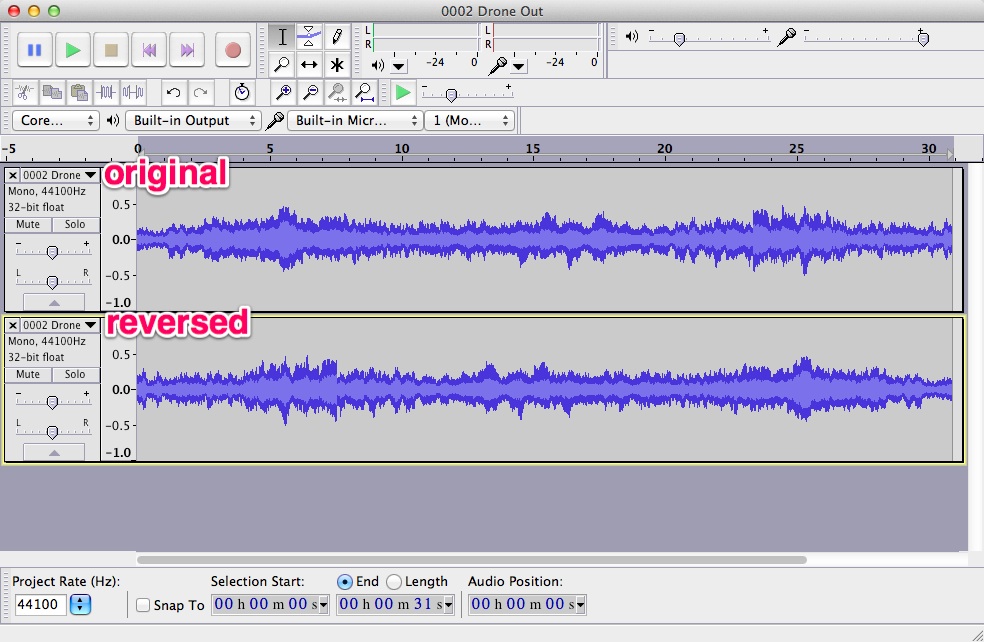

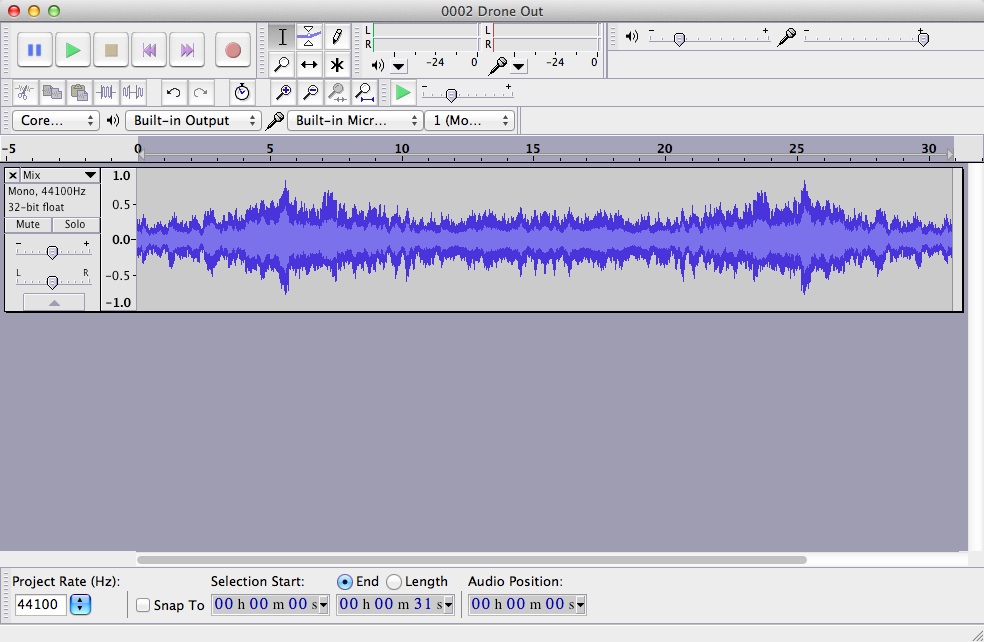

To make the loop I could have just stuck a reversed copy onto the end, so it'd play forwards and then backward, which would make the whole thing loop. But I didn't want to double the length of the sample or the size of the file.

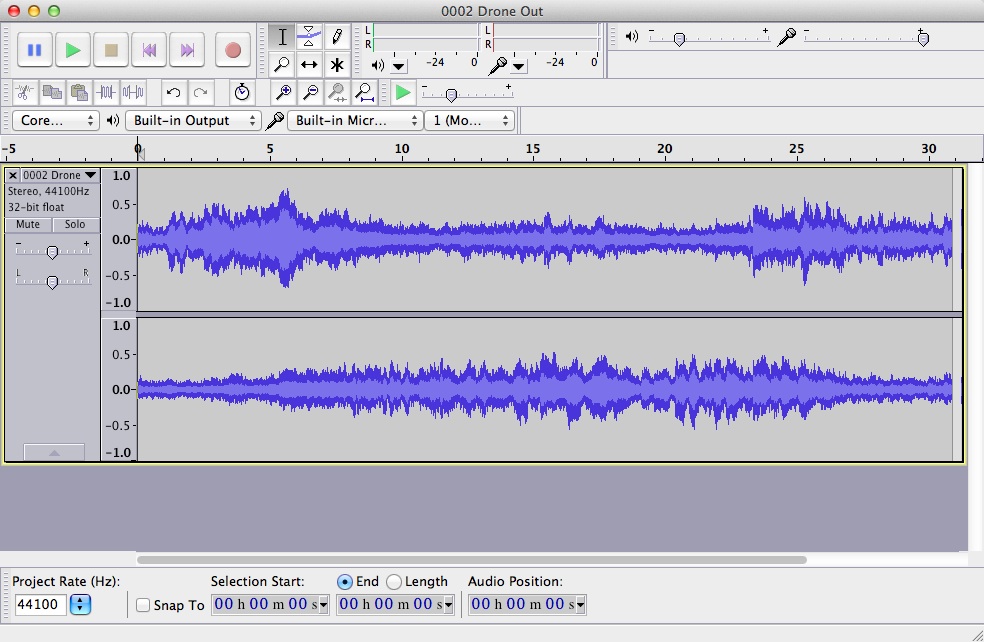

Instead I took it into Audacity...

Converted it from Stereo to Mono...

Duplicated the track and reversed it...

And then finally mixed it down to a single track that would have a seamless loop, which is mirrored, but the original length...
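For anyone who'd rather see the idea than the Audacity menus, here is a minimal sketch of mixing a mono track with its own reverse. The function name `mirrorMix` is made up for this example, and averaging the two copies is my assumption; Audacity's own mix-down may scale the levels differently.

```javascript
// Hypothetical helper: mixes a mono sample buffer with its own reverse.
// The result reads the same forwards and backwards, so the last sample
// equals the first and the track is still the original length.
function mirrorMix(samples) {
  const n = samples.length;
  const out = new Float32Array(n);
  for (let i = 0; i < n; i++) {
    // Average the forward and reversed copies so the mix cannot clip.
    out[i] = (samples[i] + samples[n - 1 - i]) / 2;
  }
  return out;
}

const original = Float32Array.from([0.0, 0.2, 0.6, -0.4, 0.8]);
const looped = mirrorMix(original);
console.log(looped.length === original.length);        // true
console.log(looped[0] === looped[looped.length - 1]);  // true
```

The end of the mixed track approaches the same value the start leaves from, which is what lets it wrap around without a jump.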

Speaking of loops...

OGG v MP3 and seamless loops

Here are the three soundscape components in <audio> tags on the page.

    <audio id="drone" width="100%"
        class="player" src="snd/drone.ogg"
        loop="loop" autoplay="autoplay"></audio>

    <audio id="rain" width="100%"
        class="player" src="snd/rain.ogg"
        loop="loop" autoplay="autoplay"></audio>

    <audio id="birds" width="100%"
        class="player" src="snd/birds.ogg"
        loop="loop" autoplay="autoplay"></audio>

As you can see they are all set to loop & autoplay. I found with MP3s there were gaps in the looping, and reading various bug reports about this suggested three things...

- Because of the way MP3s are decoded it's quite tricky for the browser to seamlessly loop them (although I'm not clear why this should be).

- Write various event checking hacks to try and force a better loop or bounce between two samples, starting one when the other finished.

- Use OGG instead.
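For the curious, the "bounce between two samples" hack from the list above might look something like the sketch below. This is not code from the project (I went with OGG instead); `bounceBetween` is a made-up name, and it assumes two <audio> elements loaded with the same file and without the loop attribute, because the "ended" event doesn't fire on a looping element.

```javascript
// Hypothetical sketch of the "bounce between two samples" hack.
// `a` and `b` are assumed to be two <audio> elements with the same src
// and WITHOUT the loop attribute, so that "ended" actually fires.
function bounceBetween(a, b) {
  function handOver(from, to) {
    from.addEventListener('ended', function () {
      to.currentTime = 0; // rewind the idle player
      to.play();          // start it as soon as the other one finishes
    });
  }
  handOver(a, b);
  handOver(b, a);
  a.play();
}

// In a page this would be called with two real elements, for example
// bounceBetween(document.getElementById('droneA'),
//               document.getElementById('droneB'));
// where both ids are hypothetical.
```

There is still a small delay between one player ending and the other starting, which is why this is a hack rather than a fix.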

Because I really want to get behind the <audio> & <video> tags, and believe that they should (and one day will) just work without any hacks or workarounds, I've stubbornly used OGG and nothing else to make sure the user gets a good audio experience.

In my experience the looping is better, but gaps start to appear if you have other things going on on the computer or in many other tabs. One day all this will magically just work!

The last two sound elements

With all the background sound out of the way there are two more (well three I guess) audio elements.

Because this is supposed to be ambient and just running in the background, your mind isn't really paying attention to it. That means that when a new headline arrived, if it just launched into speaking you'd miss most of what was actually said. This isn't helped by the Text-to-Speech being pretty hard to understand anyway.

I tried to fix this by taking a couple of seconds to fade out the ambient background before speaking the headlines. The fading gives your brain a cue that something is about to happen, as well as making it easier to hear the speaking over the top of the background sound.
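A fade like that can be sketched as a short ramp of volume values applied on a timer. The names `fadeSteps` and `fadeTo`, and the step size, are assumptions for illustration rather than the code the project actually runs.

```javascript
// Hypothetical helper: volume values for a linear fade from `from` to `to`.
function fadeSteps(from, to, steps) {
  const out = [];
  for (let i = 1; i <= steps; i++) {
    out.push(from + ((to - from) * i) / steps);
  }
  return out;
}

// Hypothetical driver: applies one step every `stepMs` milliseconds to
// anything with a `volume` property (such as an <audio> element), then
// calls `done` so the headline can be spoken.
function fadeTo(player, to, durationMs, stepMs, done) {
  const steps = Math.max(1, Math.round(durationMs / stepMs));
  const ramp = fadeSteps(player.volume, to, steps);
  let i = 0;
  const timer = setInterval(function () {
    // Clamp to 0..1 because <audio> rejects volumes outside that range.
    player.volume = Math.min(1, Math.max(0, ramp[i]));
    i++;
    if (i >= ramp.length) {
      clearInterval(timer);
      if (done) done();
    }
  }, stepMs);
}
```

Something like `fadeTo(drone, 0.2, 2000, 100, speakHeadline)` would drop the drone to a fifth of its volume over two seconds and then hand over, where `drone` and `speakHeadline` are again hypothetical.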

Turns out it still wasn't enough to prepare you to hear the headline.

So I added two more things: an alert before the headline to let you know one was about to appear, and the Text-To-Speech now starts with the section that the headline is from, i.e. 'Sport - some footballer just did something'. Not because I particularly cared for the section, but rather to have one or two words at the start that the brain could throw away while tuning in without it mattering too much.

But because this is supposed to be ambient, the alert wasn't to be too alerty, so you could just ignore it (and the headline) if you wanted to. Basically a not very alerting alert.

For no better reason than just to amuse myself I took the three tone xylophone Bing-Dee-Bing noise from the 80s BBC comedy program Hi-De-Hi. This is very much like the Ding-Dong sounds you get before airport and train station announcements, but with more knowing irony.

Note: this audio which would have been on SoundCloud has now vanished to the linkrot of time.

For anyone my age in the UK who wasn't allowed to watch ITV because of the adverts the "Bing-Dee-Bing, Hello Campers" (as seen at the start of this YouTube clip right after the credits) represents the age at which our uncomfortable understanding of things that are supposed to be funny being deeply unfunny and yet unable to escape it, begins to develop.

"Bing-Dee-Bing" (the first 3 seconds of this...)

Last but not least, sometimes it can be a long time between headlines. This is the point at which I begin to wonder if something has gone wrong with my code and it has stopped updating or goodness knows what. To put my mind at rest, if after 7 minutes there are no new headlines it plays a little jingle, and gives a "You're listening to Guardian Ambient Headline Radio" ident.
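The "has it all gone quiet?" check boils down to remembering when the last headline was read and comparing against the clock. A minimal sketch follows; every name in it is hypothetical, and restarting the clock after the ident plays is my assumption. Only the 7 minute figure comes from the project.

```javascript
// Hypothetical names throughout; only the 7 minute figure comes from the post.
const IDENT_AFTER_MS = 7 * 60 * 1000;

function makeIdentWatcher(playIdent, startMs) {
  let lastActivityMs = startMs;
  return {
    // Call whenever a new headline has been read out.
    headlineSpoken(nowMs) {
      lastActivityMs = nowMs;
    },
    // Call on a regular tick; plays the ident if it has been quiet too long.
    tick(nowMs) {
      if (nowMs - lastActivityMs >= IDENT_AFTER_MS) {
        playIdent();
        // Assumption: restart the clock so the ident does not repeat every tick.
        lastActivityMs = nowMs;
        return true;
      }
      return false;
    }
  };
}
```

In the page, `tick(Date.now())` would be called from a timer and `headlineSpoken(Date.now())` from wherever the Text-To-Speech finishes.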

Instead of the alert, I opted for a "jingle" kind of sound, something newsy and more upbeat than the ambient sound. Hopefully it wouldn't play too often, because if it did it meant that the Guardian itself was broken :)

Note: this audio which would have been on SoundCloud has now vanished to the linkrot of time.

The ambience fades, the jingle kicks in, ident, and then back to ambience.

I think that's all for the sound. Probably need to add a volume control at some point too.

* * *

TEXT TO SPEECH

The text to speech is provided by speak.js, which is a javascript implementation of eSpeak, the open source speech synthesizer. The file itself is 2.3MB though, not really something you want to throw at a project just because having some extra functionality would be nice.

In this case it was the main premise for the project so no big deal.

I did have a quick look round at web services that'd take a chunk of text and turn it into a better quality mp3 file but nothing really took my fancy.

And, in theory I could recompile the speak.js to use a different, slightly more defined voice but following the instructions made my head hurt :)

Anyway, the implementation is really easy and I just had to add one hook to detect when the audio had finished playing (it creates an <audio> tag on the fly, so I just added a line directly afterwards attaching the "ended" event).
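The hook itself is only a few lines. The sketch below is not the line I added inside speak.js, just the general shape of it: `onFinished` is a made-up name, and the only thing it relies on is the standard "ended" event that <audio> elements fire when playback reaches the end.

```javascript
// Hypothetical helper: run `callback` once when `audioEl` finishes playing.
function onFinished(audioEl, callback) {
  function handler() {
    // Remove the listener first so the callback runs only once.
    audioEl.removeEventListener('ended', handler);
    callback();
  }
  audioEl.addEventListener('ended', handler);
}

// In a page this might be used to bring the ambient sound back up once
// the headline has been spoken, e.g. onFinished(speechAudio, fadeBackUp);
// where both names are hypothetical.
```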

* * *

GRAPHIC DESIGN

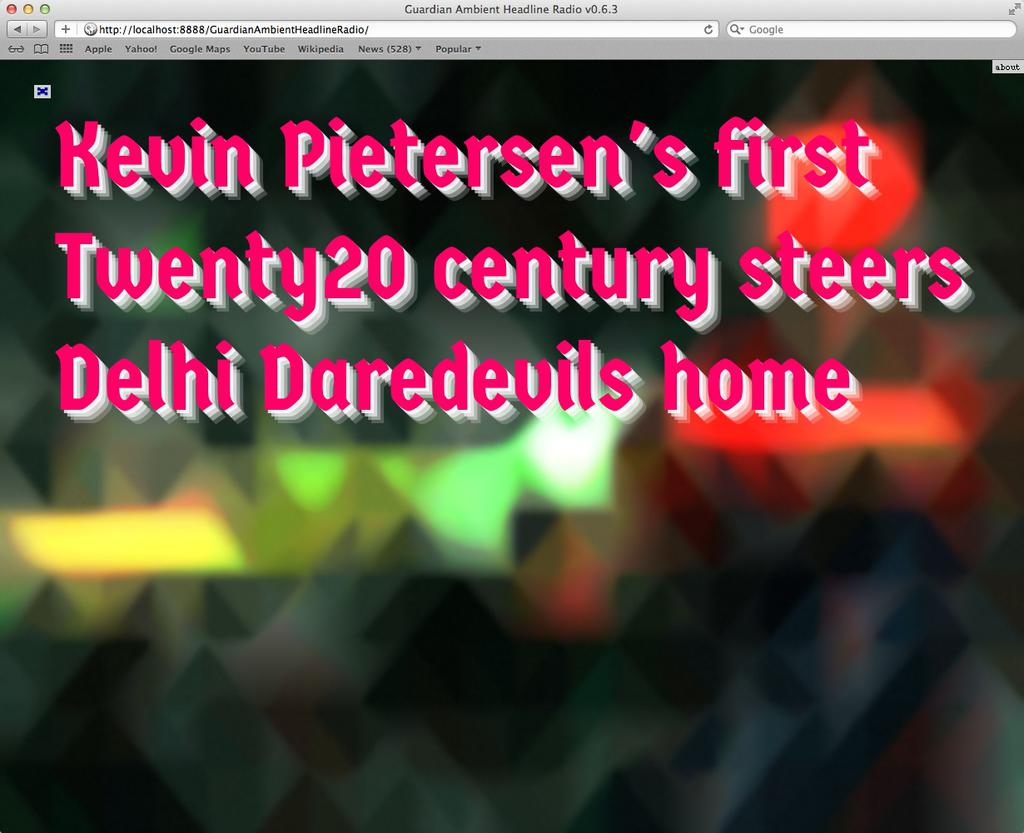

I've already covered the design in more detail over here: The pxl effect with javascript and canvas (and maths), the gist of it though is this...

Via the Guardian API, without an API key, you only have access to the thumbnails for each story, which are a tiny 140x86px. I wanted to do something that could blow up the image to full screen in an interesting way, but didn't overshadow the main objective of the project, which is sound.

And so it turns the thumbnail into a somewhat abstract background, which you can still kind of tell what it is, but not always.

Then I figured it could also have a second function of giving you a general idea of how "fresh" a headline was, by adding some blur/glow filters over time. If the image is nice and crisp with sharp edges then it's new. If it's blurred then it's been around a while.

Yet another background signal of information, it looks a bit like this...

I'm using the Pixastic js library for all the heavy blur lifting (or at least until we get it a bit more native into webkit).

Once a second, starting after two minutes, it applies the following filters. The values are pretty small because otherwise the whole thing goes to mush in less than a minute :)

    // look the canvas up again before each pass
    ct=$('#targetCanvas')[0];
    Pixastic.process(ct, "glow", {amount:0.003,radius:3.0});

    ct=$('#targetCanvas')[0];
    Pixastic.process(ct, "noise", {mono:true,amount:0.01,strength:0.05});

    ct=$('#targetCanvas')[0];
    Pixastic.process(ct, "blurfast", {amount:0.04});
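The timing around those filters can be sketched separately from Pixastic itself. In the sketch below `makeAger`, `GRACE_MS` and `applyFilters` are hypothetical names, and I'm assuming the two minutes are counted from when the headline's image first appears; `applyFilters` stands in for the three Pixastic.process calls above.

```javascript
// Hypothetical names; the two minute grace period comes from the post.
const GRACE_MS = 2 * 60 * 1000;

// Returns a tick function that leaves the image alone for the first
// two minutes and runs one pass of filters on every tick after that.
function makeAger(applyFilters, shownAtMs) {
  return function tick(nowMs) {
    if (nowMs - shownAtMs < GRACE_MS) {
      return false; // still fresh, keep the image crisp
    }
    applyFilters();
    return true;
  };
}

// In a page this might be driven once a second, for example:
// var tick = makeAger(runPixasticFilters, Date.now());
// setInterval(function () { tick(Date.now()); }, 1000);
// where runPixasticFilters is a hypothetical wrapper around the calls above.
```

Each pass stacks on top of the last, which is why such small filter amounts still turn the image soft over a few minutes.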

The end result is often very pleasing and a contrast to the original sharp image.

* * *

TECHNOLOGY

And there we have it: background image, ambient audio and text to speech, all running on the client in javascript and modern technologies, i.e. HTML5, and CSS3 once we get the image filters.

Running in the client is a lot more limiting, but at the same time I don't need to tie up two laptops constantly running 100s of pounds worth of software "broadcasting" out to the internet 24 hours a day.

Although that does have a certain appeal. I'm going to see if I can get that down to one laptop.

* * *

THINGS I LEARNT ALONG THE WAY

The final implementation turned out to be pretty quick and easy, it's just the year thinking about it and hunting out libraries that took the time :)

And during that time I've had a good look around Ableton Live, and it no longer makes no-sense-at-all, which is nice. Same for the Native Instruments stuff, lots of gorgeous synths I've not had the chance to dig into too much.

I also watched a whole bunch of videos over at MacProVideo, specifically:

I grabbed a year's subscription, not sure I'd want to buy each lesson individually.

Open Sound Control is another fun thing I looked at, along with TouchOSC and the Lemur OSC controller, mainly because I like the idea of controlling stuff with futuristic looking touch surfaces...

Image from h e x l e r

Processing + MIDI & OSC: I've used Processing for a while now, but it was nice to drop in the oscP5 and proMidi libraries to have a quick play at sending messages back and forth. I didn't get to use them for this project, but knowing I can make Lemur running on the iPad talk to Processing makes me very happy indeed. Now I just have to shoehorn it into a project.

I guess the one thing that I saw a hint of, but didn't really dig into was generating the ambient soundscapes with javascript. I've seen some interesting pieces here & there about generating WAVs on the fly (which is how the text-to-speech works), and bits & bobs around the audioData API. So at some point I may try and have a go at doing something with that.

Anyway, The END.

* * *