The pxl effect with javascript and canvas (and maths)

As part of a bigger project I wanted to generate an abstract background image/texture. There are obviously a huge number of ways to do this, but because of a few constraints I put on myself, the method I ended up with (described below) seemed like a good solution. First, the constraints...

- The project involves grabbing the latest news headline from the Guardian API, and because I want to run this in JavaScript from a GitHub-hosted page, I don't want to use an API key that everyone will be able to see. That in turn means I don't have access to any of the images (you need an API key to get the media assets related to a story) other than the thumbnail.
- The thumbnail is tiny: 140px x 84px.
- Telling the browser to scale up the image without smoothing (see snippet below) gives it a nice mosaic effect, but the iPad ignores that and makes it look all blurred and shit anyway.

  ```css
  /* The selector was missing from the original snippet; `img` is assumed here. */
  img {
    image-rendering: -moz-crisp-edges;
    image-rendering: -o-crisp-edges;
    image-rendering: -webkit-optimize-contrast;
    -ms-interpolation-mode: nearest-neighbor;
  }
  ```

- It has to be done in JavaScript, so no server-side image processing (although, as you'll see later, it does involve a small amount of server-side stuff, but for a totally different reason).
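For reference, the mosaic look that CSS asks for is just nearest-neighbour scaling: every source pixel becomes a solid block. A minimal sketch of doing it by hand, so it doesn't depend on the browser honouring the CSS, assuming the pixels are in the flat RGBA layout of canvas `ImageData.data` (`upscaleNearest` is a hypothetical name, not code from this project):

```javascript
// Nearest-neighbour upscale of an RGBA buffer (ImageData.data layout:
// 4 bytes per pixel, rows stored top to bottom). Each source pixel
// becomes a scale x scale block, giving hard-edged mosaic tiles.
function upscaleNearest(src, width, height, scale) {
  const outWidth = width * scale;
  const outHeight = height * scale;
  const out = new Uint8ClampedArray(outWidth * outHeight * 4);
  for (let y = 0; y < outHeight; y++) {
    // Integer division maps each output row back to a single source row.
    const sy = Math.floor(y / scale);
    for (let x = 0; x < outWidth; x++) {
      const sx = Math.floor(x / scale);
      const s = (sy * width + sx) * 4; // source pixel offset
      const d = (y * outWidth + x) * 4; // destination pixel offset
      out[d] = src[s];         // red
      out[d + 1] = src[s + 1]; // green
      out[d + 2] = src[s + 2]; // blue
      out[d + 3] = src[s + 3]; // alpha
    }
  }
  return { data: out, width: outWidth, height: outHeight };
}
```

In a browser the result can be wrapped with `new ImageData(result.data, result.width, result.height)` and drawn with `ctx.putImageData(imageData, 0, 0)`.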

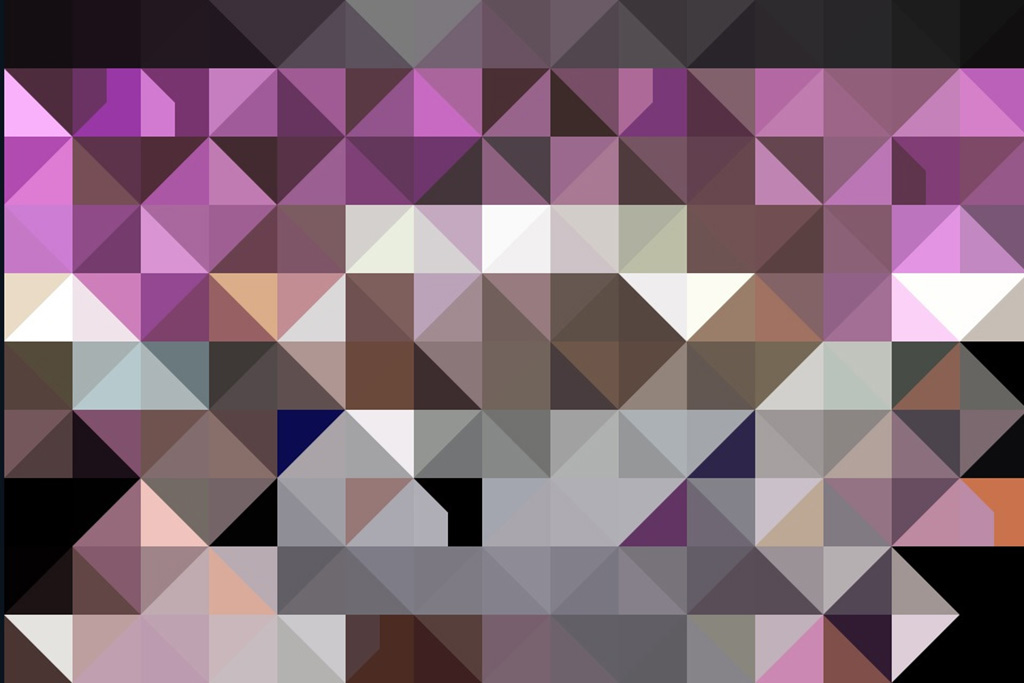

Recently the pxl app for iPhone has filtered through my friends. It allows you to apply a variety of abstract compositions to your photos, a popular one turns an image into a collection of triangles, rather like this...

...a general effect that should work well, and allow us to create a large background image out of a relatively small source image.

This seemed like a good solution. By doing something very similar, I can turn a thumbnail that actually relates to the latest headline (which is the thing we're really after) into a fairly abstract and pleasing, but not too distracting, background. All I needed to do was chop it up into triangles.

Method

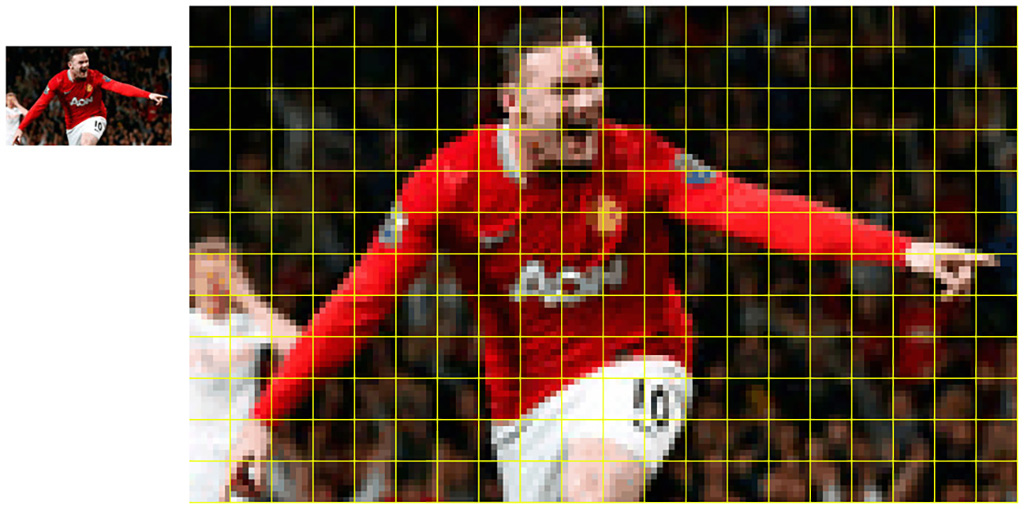

No idea if this was intentional, but the thumbnail from the Guardian is divisible by 7 in both width and height, giving us a grid of 20 x 12 tiles, each tile 7 x 7 pixels.
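A minimal sketch of that tiling step, assuming the pixels come from canvas `getImageData()`, whose `data` array holds 4 bytes (R, G, B, A) per pixel, row by row. `tileGrid` and `pixelOffset` are hypothetical helper names, not the code from the post:

```javascript
// Split an image into square tiles and list each tile's top-left corner.
// Throws if the image doesn't divide evenly (140 x 84 with 7px tiles does).
function tileGrid(width, height, tileSize) {
  if (width % tileSize !== 0 || height % tileSize !== 0) {
    throw new Error(`${width}x${height} does not divide into ${tileSize}px tiles`);
  }
  const cols = width / tileSize;
  const rows = height / tileSize;
  const tiles = [];
  for (let row = 0; row < rows; row++) {
    for (let col = 0; col < cols; col++) {
      tiles.push({ x: col * tileSize, y: row * tileSize });
    }
  }
  return { cols, rows, tiles };
}

// Byte offset of pixel (x, y) in ImageData.data for an image `width` pixels wide.
function pixelOffset(x, y, width) {
  return (y * width + x) * 4;
}
```

For the Guardian thumbnail, `tileGrid(140, 84, 7)` gives 20 columns, 12 rows and 240 tiles, the last one starting at (133, 77).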

The next step was to look at each "tile" and work out which way to split it (diagonally from top-left to bottom-right, or from top-right to bottom-left) and which colours to use for each half.

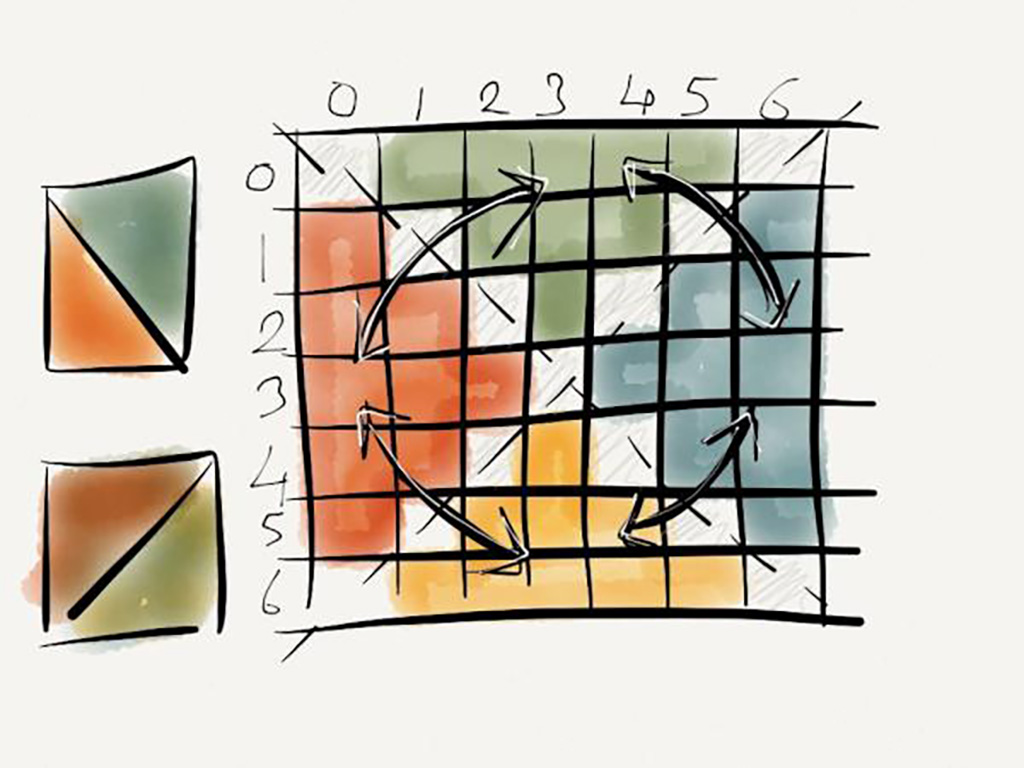

I decided the best way to do this was to divide the square up into quarters, top, left, right and bottom. Average the rgb values in each quarter and then work out if the top quarter was closer in colour to either the left or right quarter. If it was closer to the one on the left we'd split the tile that way, combining the top average colour with the left one, and the opposite if it was closer to the right quarter. A picture will probably explain it better.

You can see that each quarter contains 9 pixels; I didn't bother counting the pixels on the diagonals. So I averaged the RGB values of the 9 pixels in each quarter and then compared them. In the above example the green is closer to the blue than to the red (and the yellow is closer to the red than to the blue), so we'd split top-left to bottom-right.
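The per-tile logic above can be sketched like this, generalised to any tile size of 3 or more. It assumes RGBA pixel data in the `ImageData.data` layout. The function names, the squared-RGB-distance colour metric, the tie-break (ties go to the left pairing) and colouring each triangle with the plain mean of its two quarter averages are all assumptions, since the post doesn't pin those details down:

```javascript
// Which triangular quarter of a size x size tile is pixel (dx, dy) in?
// Pixels on either diagonal belong to no quarter and return null.
function quarterOf(dx, dy, size) {
  const last = size - 1;
  if (dx === dy || dx + dy === last) return null;
  const aboveMain = dy < dx;        // above the top-left to bottom-right diagonal
  const aboveAnti = dx + dy < last; // above the top-right to bottom-left diagonal
  if (aboveMain && aboveAnti) return "top";
  if (!aboveMain && !aboveAnti) return "bottom";
  return aboveMain ? "right" : "left";
}

// Average R, G, B of each quarter of the tile whose top-left pixel is (x0, y0)
// in an RGBA buffer `data` that is `width` pixels wide. Needs size >= 3.
function quarterAverages(data, width, x0, y0, size) {
  const sums = { top: [0, 0, 0, 0], left: [0, 0, 0, 0], right: [0, 0, 0, 0], bottom: [0, 0, 0, 0] };
  for (let dy = 0; dy < size; dy++) {
    for (let dx = 0; dx < size; dx++) {
      const q = quarterOf(dx, dy, size);
      if (q === null) continue; // skip diagonal pixels
      const i = ((y0 + dy) * width + (x0 + dx)) * 4;
      const s = sums[q]; // [r, g, b, pixel count]
      s[0] += data[i];
      s[1] += data[i + 1];
      s[2] += data[i + 2];
      s[3] += 1;
    }
  }
  const averages = {};
  for (const [q, [r, g, b, n]] of Object.entries(sums)) {
    averages[q] = [r / n, g / n, b / n];
  }
  return averages;
}

// Assumed metric: squared Euclidean distance between two RGB colours.
function colourDistance(a, b) {
  return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2 + (a[2] - b[2]) ** 2;
}

// Assumed triangle colour: the mean of the two quarter averages it combines.
function mix(a, b) {
  return [(a[0] + b[0]) / 2, (a[1] + b[1]) / 2, (a[2] + b[2]) / 2];
}

// Pick the diagonal for one tile and the colour of each resulting triangle.
function splitTile(data, width, x0, y0, size) {
  const q = quarterAverages(data, width, x0, y0, size);
  // Ties go to the left pairing (an arbitrary choice).
  if (colourDistance(q.top, q.left) <= colourDistance(q.top, q.right)) {
    // Top joins left, so the cut runs from top-right to bottom-left.
    return {
      cut: "topRight-bottomLeft",
      colours: { topLeft: mix(q.top, q.left), bottomRight: mix(q.bottom, q.right) },
    };
  }
  // Top joins right, so the cut runs from top-left to bottom-right.
  return {
    cut: "topLeft-bottomRight",
    colours: { topRight: mix(q.top, q.right), bottomLeft: mix(q.bottom, q.left) },
  };
}
```

With 7 x 7 tiles, `quarterOf` puts 13 pixels on the two diagonals and 9 in each quarter, which matches the count above.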

The code, which is here on GitHub, is a little specific to the 140 x 84 pixel size and grabs the pixel values long-hand. At some point I'll probably make it generic so that it can work with any sized image divided into any number of tiles, but just to get it working it seemed easier to hardcode some of the values.

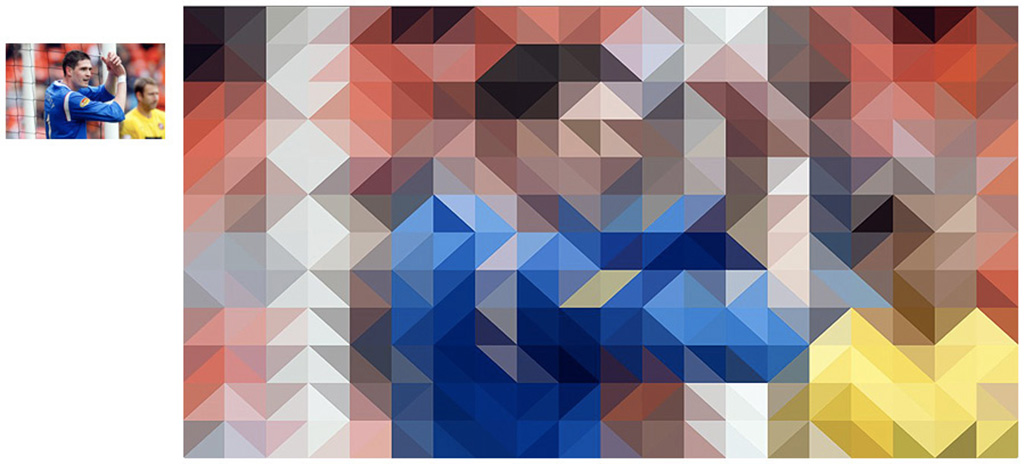

The end result is something like this...
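Drawing a result like that means turning each tile's split into two filled triangles on a bigger canvas. A sketch of that step, where `tileTriangles`, `fillTriangle`, the `scale` parameter (the on-screen size of one tile) and the two cut names are all hypothetical; only `fillTriangle` needs a browser, since it calls the standard canvas 2D path methods:

```javascript
// Corner points of the two triangles for the tile at grid position (col, row),
// scaled so that each tile is `scale` pixels square on screen.
// `cut` is "topRight-bottomLeft" or "topLeft-bottomRight" (hypothetical names).
function tileTriangles(col, row, scale, cut) {
  const x = col * scale;
  const y = row * scale;
  const tl = [x, y], tr = [x + scale, y];
  const bl = [x, y + scale], br = [x + scale, y + scale];
  if (cut === "topRight-bottomLeft") {
    return { topLeft: [tl, tr, bl], bottomRight: [tr, br, bl] };
  }
  return { topRight: [tl, tr, br], bottomLeft: [tl, br, bl] };
}

// Fill one triangle with an [r, g, b] colour on a CanvasRenderingContext2D.
function fillTriangle(ctx, points, rgb) {
  ctx.fillStyle = `rgb(${rgb.map(Math.round).join(",")})`;
  ctx.beginPath();
  ctx.moveTo(points[0][0], points[0][1]);
  ctx.lineTo(points[1][0], points[1][1]);
  ctx.lineTo(points[2][0], points[2][1]);
  ctx.closePath();
  ctx.fill();
}
```

With a scale of, say, 10, the 20 x 12 grid from the thumbnail becomes a 200 x 120 canvas; the scale only changes the size of the output, not the number of triangles.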

I also dropped in some meta tags to make the whole thing work a bit better on the iPad/iPhone and Google TV, just because I wanted some nice abstract art in my living room :)

```html
<meta name="gtv-autozoom" content="off" />
<meta name="apple-mobile-web-app-capable" content="yes" />
<meta name="apple-mobile-web-app-status-bar-style" content="black-translucent" />
<!-- All viewport settings combined into a single tag -->
<meta name="viewport" content="width=device-width, initial-scale=1.0, user-scalable=no" />
```

Weirdly, the project is really supposed to be about text-to-speech and music, with the image background as just an extra bit of colourfulness. But now that it's here, I may fork the code to keep the image part as a snapshot for other things, or at least so it doesn't get bogged down in all the other stuff.

Notes about loading images into canvas from remote servers

Again, if I were running this on something other than GitHub-hosted pages, I could do a bunch of this stuff on the server side and not worry about it, but instead I have to deal with loading an image from a remote server.

In an ideal world the Guardian would be using CORS, which would let canvas read the image's pixel data, but boo, not yet. So a proxy server of sorts has to deliver the image to JavaScript. GitHub Pages only serves static files, so we can't put anything there that fetches the image server-side; that part has to run on another server.

I put this up on Google App Engine (my general weapon of choice), and experimented with Python 2.7 on there at the same time...

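```python
import base64
import json
import re
import urllib2

import webapp2

# Only images under the Guardian's static image path are proxied.
GUARDIAN_IMAGE_ROOT = 'http://static.guim.co.uk/sys-images/'
# JSONP callback names must be plain identifiers (like the ones jQuery generates),
# so arbitrary script can't be injected through the 'callback' parameter.
CALLBACK_RE = re.compile(r'^[A-Za-z_$][\w$.]*$')


class MainPage(webapp2.RequestHandler):

    def get(self):
        # 'img' is the second half of the thumbnail URL; webapp2 has already
        # URL-decoded the query parameter.
        remote = urllib2.urlopen(GUARDIAN_IMAGE_ROOT + self.request.get('img'))

        # Base64-encode the raw JPEG bytes and wrap them as a data URI.
        data = {
            'data': 'data:image/jpeg;base64,' + base64.b64encode(remote.read())
        }
        body = json.dumps(data)

        callback = self.request.get('callback')
        if callback and CALLBACK_RE.match(callback):
            # JSONP: wrap the JSON in a call to the client's callback function.
            self.response.headers['Content-Type'] = 'application/javascript'
            self.response.out.write('%s(%s)' % (callback, body))
        else:
            self.response.headers['Content-Type'] = 'application/json'
            self.response.out.write(body)


app = webapp2.WSGIApplication(
    [('/img_to_json', MainPage)], debug=True
)
```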

...which reads in an image from the Guardian, converts it to base64 and chucks it out in a JSON response (wrapped in a function call, JSONP-style, when a `callback` parameter is passed).

Meanwhile, on the client side, jQuery calls the endpoint, passing over the second half of the image URL, gets the JSON back and sets the source of an image to the base64 data URI. Because that image no longer comes from another domain, it isn't caught by the browser's cross-domain security, and we can get at its pixel data after shoving it onto a canvas object.
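The post does this with jQuery; a dependency-free sketch of the same round trip follows. Since the handler supports a `callback` parameter, it uses JSONP via a `<script>` tag (presumably what jQuery was doing too), because a plain cross-domain `fetch` to the proxy would need CORS headers the handler doesn't send. `proxyBase` (wherever the App Engine app lives), `proxyUrl`, `jsonp` and `loadThumbnail` are hypothetical names; only `proxyUrl` runs outside a browser:

```javascript
// Prefix the App Engine handler prepends; the rest of the URL is sent as `img`.
const GUARDIAN_IMAGE_ROOT = "http://static.guim.co.uk/sys-images/";

// Build the proxy request URL for a Guardian thumbnail URL.
function proxyUrl(proxyBase, thumbnailUrl) {
  if (!thumbnailUrl.startsWith(GUARDIAN_IMAGE_ROOT)) {
    throw new Error("not a Guardian sys-images URL: " + thumbnailUrl);
  }
  const path = thumbnailUrl.slice(GUARDIAN_IMAGE_ROOT.length);
  return `${proxyBase}/img_to_json?img=${encodeURIComponent(path)}`;
}

// Browser only: minimal JSONP request resolving with the callback's argument.
function jsonp(url) {
  return new Promise((resolve, reject) => {
    const name = "pxlCallback" + Date.now() + Math.floor(Math.random() * 1e6);
    const script = document.createElement("script");
    const cleanUp = () => { delete window[name]; script.remove(); };
    window[name] = (payload) => { cleanUp(); resolve(payload); };
    script.onerror = () => { cleanUp(); reject(new Error("JSONP request failed")); };
    script.src = url + (url.includes("?") ? "&" : "?") + "callback=" + name;
    document.head.appendChild(script);
  });
}

// Browser only: load the data URI into an <img>, draw it onto the canvas and
// return its pixels. A data: URI doesn't taint the canvas, so this is allowed.
async function loadThumbnail(proxyBase, thumbnailUrl, canvas) {
  const { data } = await jsonp(proxyUrl(proxyBase, thumbnailUrl));
  const img = new Image();
  await new Promise((resolve, reject) => {
    img.onload = resolve;
    img.onerror = reject;
    img.src = data;
  });
  canvas.width = img.naturalWidth;
  canvas.height = img.naturalHeight;
  const ctx = canvas.getContext("2d");
  ctx.drawImage(img, 0, 0);
  return ctx.getImageData(0, 0, canvas.width, canvas.height);
}
```

If the Guardian does start sending CORS headers, the proxy becomes unnecessary: setting `img.crossOrigin = "anonymous"` before assigning `img.src` requests the image with CORS, and the canvas stays readable as long as the response's `Access-Control-Allow-Origin` header allows the page's origin.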

It's a bit of a hack, albeit a fun one, but hopefully wider adoption of CORS will remove the need for any server-side stuff, at which point I can drop that part of the code altogether.

* * *